Building on the shoulders of giants

Artificial Intelligence (AI) has a rich history spanning over half a century. It has played a pivotal role in advancing digital technology and in the creation of major technology companies. Initially emerging during World War II as a code-breaking tool, AI has since been integral in various fields, including robotics, recognition technologies, and more recently, large language models (LLMs) and generative AI. Generative AI has brought AI into the mainstream, much like the Internet and personal computers did in previous decades. However, AI's capabilities are often misunderstood: current AI solutions are "Narrow AI" rather than the more ambitious "General AI." The journey toward General AI remains a topic of debate among experts. Historically, AI has faced periods of stagnation, such as the "AI Winter" of the 1970s. But breakthroughs in computing power and neural networks, particularly with the rediscovery of modern backpropagation techniques in the 1980s, have propelled AI forward. The rise of the Internet in the 2000s and 2010s further amplified AI's impact, with companies like Google and Facebook relying heavily on AI. Today, the focus is on LLMs, which leverage the vast corpus of Internet text for training and the efficiency and universality of language, leading to high-fidelity solutions like ChatGPT. Despite the high costs and energy demands of training these models, the market for generative AI is booming, with forecasts predicting it will exceed USD 1 trillion in the next decade. As AI continues to evolve, companies must navigate challenges such as data management, privacy, and scalability, drawing lessons from past technological bubbles to harness AI's potential responsibly.

The 2014 film The Imitation Game depicts Alan Turing's revolutionary contributions during World War II, focusing on his creation of the "Bombe," a device that effectively cracked Germany's Enigma code. Turing's innovative work and later research were instrumental in establishing the foundations of artificial intelligence (AI). Concurrently, neuroscience researchers and mathematicians such as Warren McCulloch and Walter Pitts were formulating brain models that would eventually support the development of neural networks and reinforcement learning.

In a 1950 research paper, Alan Turing introduced an experiment he called "The Imitation Game" to investigate the question, "Can machines think?" In this scenario, a computer replaces one of the players in a three-person game. If an interrogator cannot reliably distinguish the computer from the human participant, Turing suggested it demonstrates human-like cognitive abilities. This experiment became famously known as the Turing Test, a benchmark for assessing a machine's ability to display intelligent behavior equivalent to, or indistinguishable from, that of a human. In 2014, a chatbot named 'Eugene Goostman' was reported to have passed the Turing Test at an event organized by the University of Reading, although the claim was widely disputed. More recently, Large Language Models (LLMs) and Generative AI solutions developed by companies such as OpenAI, Google, and Meta have also been reported to pass versions of the test.

While numerous AI solutions have been developed over the years, the industry's success has relied on significant technological breakthroughs. Interest in AI surged during the 1950s and 1960s as researchers explored AI concepts and developed solutions to leverage emerging computing technologies. Notable milestones include:

AI stagnated during the 1970s and early 1980s, a period often referred to as the 'First AI Winter.' During this time, AI research did not meet the high expectations set by early successes, largely due to limitations in computer performance and scalability. However, the 1980s saw a resurgence in AI development, driven by significant improvements in computing capabilities and several key breakthroughs, which included:

After 1986, ANN models became feasible for practical use, although they were costly and mainly suited for specialized applications. AI more broadly gained significant public attention in 1997, when the IBM supercomputer Deep Blue defeated Garry Kasparov, the reigning world chess champion at the time, in a six-game rematch after losing their first match in 1996. Deep Blue, however, relied on large-scale search and handcrafted evaluation functions rather than ANNs.

The ANN architecture pioneered by Warren McCulloch and Walter Pitts in the 1940s, and by others such as Frank Rosenblatt in the 1950s, is compelling because of its similarity to simplified brain models. These simplified models characterize the brain as an extensive 2D grid of interconnected neurons. Each neuron has an axon, which transmits electrical impulses to other neurons via synapses and dendrites. The strength of these impulses is influenced by the signal produced by the axons and the resistance (attenuation) provided by the synapses, which adapt as the brain undergoes training.

ANN models can be likened to electrical circuits where grids of interconnected operational amplifiers and resistors represent axons and synapses, respectively. The resistance of each resistor can be modified (tuned) according to training data to ensure the ANN produces the desired outputs for the given inputs in the training dataset. A similar analogy applies to the pixels in a 2D image generated by a graphics processing unit (GPU).
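The tuning described above can be sketched with a single artificial neuron. The following is a minimal illustration, assuming a toy task (learning a logical OR gate) and the classic perceptron learning rule; the function names, learning rate, and epoch count are illustrative choices, not details taken from any particular system.

```python
# A single artificial neuron whose weights (the "resistors") are tuned from data.
# All names and settings here are illustrative assumptions.

def step(x):
    """Threshold activation: the neuron fires (1) or stays silent (0)."""
    return 1 if x > 0 else 0

def predict(weights, bias, inputs):
    """Weighted sum of the inputs passed through the activation."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return step(total)

def train(samples, epochs=20, lr=0.1):
    """Perceptron rule: nudge each weight in proportion to the output error."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias

# Training data for a logical OR gate (an assumed toy task).
OR_SAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

weights, bias = train(OR_SAMPLES)
outputs = [predict(weights, bias, inputs) for inputs, _ in OR_SAMPLES]
print(outputs)  # [0, 1, 1, 1]
```

After a few passes over the four examples the weights settle, and the neuron reproduces the desired output for every input in the training set. Practical ANNs stack many such neurons in layers, which is where tuning becomes expensive.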

Massive ANNs are needed for practical solutions. For example, the LLM behind OpenAI's GPT-4 is often cited as having 100 billion neurons (over 100 layers) and 100 trillion synapses, although OpenAI has not published official figures. To tune an ANN, the synapses (weights) must be calibrated in unison, which would ordinarily create an exponential increase in computing requirements with naïve approaches. This proves untenable even for modestly sized ANNs and was a barrier for meaningful ANN implementations without modern backpropagation techniques.
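To make the contrast concrete, the sketch below compares backpropagation with one simple naive alternative on a tiny network. Finite differences are used here only as an illustrative stand-in for the "naïve approaches" mentioned above; the network shape, weights, and input values are assumptions chosen for the example.

```python
import math

def forward(params, x):
    """Tiny network: 2 inputs -> 2 tanh hidden neurons -> 1 linear output."""
    w00, w01, w10, w11, v0, v1 = params
    h0 = math.tanh(w00 * x[0] + w01 * x[1])
    h1 = math.tanh(w10 * x[0] + w11 * x[1])
    return (h0, h1), v0 * h0 + v1 * h1

def loss(params, x, target):
    """Squared error between the network output and the target."""
    _, output = forward(params, x)
    return 0.5 * (output - target) ** 2

def backprop_gradient(params, x, target):
    """All six gradients from one forward and one backward pass (chain rule)."""
    _, _, _, _, v0, v1 = params
    (h0, h1), output = forward(params, x)
    d_out = output - target               # dLoss/dOutput
    d_pre0 = d_out * v0 * (1 - h0 * h0)   # tanh'(z) = 1 - tanh(z)^2
    d_pre1 = d_out * v1 * (1 - h1 * h1)
    return [d_pre0 * x[0], d_pre0 * x[1],
            d_pre1 * x[0], d_pre1 * x[1],
            d_out * h0, d_out * h1]

def naive_gradient(params, x, target, eps=1e-6):
    """Finite differences: two extra loss evaluations for every weight."""
    grads = []
    for i in range(len(params)):
        up = params[:i] + [params[i] + eps] + params[i + 1:]
        down = params[:i] + [params[i] - eps] + params[i + 1:]
        grads.append((loss(up, x, target) - loss(down, x, target)) / (2 * eps))
    return grads

# Illustrative weights, input, and target (assumed values).
params = [0.5, -0.3, 0.8, 0.2, 1.0, -1.5]
x, target = [1.0, 2.0], 0.5

fast = backprop_gradient(params, x, target)
slow = naive_gradient(params, x, target)
max_diff = max(abs(a - b) for a, b in zip(fast, slow))
print(max_diff < 1e-6)  # True: both methods agree on the gradient
```

Both methods produce the same gradient, but the naive version re-evaluates the whole network twice per weight, while backpropagation obtains every gradient from a single backward pass. With six weights the difference is negligible; with billions of weights it decides whether training is feasible at all.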

During the 2000s and 2010s, the widespread adoption of the Internet, social networks, and digital technologies significantly enhanced the market potential and economic value of AI. In this era, tech giants like Google (now Alphabet) and Facebook (now Meta) relied heavily on AI for their operations, while major technology companies such as IBM and Microsoft made substantial investments in AI. Since then, breakthroughs in deep learning have yielded significant benefits, especially in areas like language processing, computer vision, autonomous systems and robotics, cybersecurity, prediction, forecasting, and condition monitoring. A landmark achievement of this period was in 2016 when DeepMind, a subsidiary of Google, defeated top Go players with its AlphaGo platform.

OpenAI was founded in December 2015 as a non-profit research initiative with the ambitious aim of advancing digital intelligence in a manner that would most likely benefit humanity. At that time, the founders could not have anticipated what OpenAI would evolve into seven years later, with the launch of the ChatGPT Generative AI solution in November 2022.

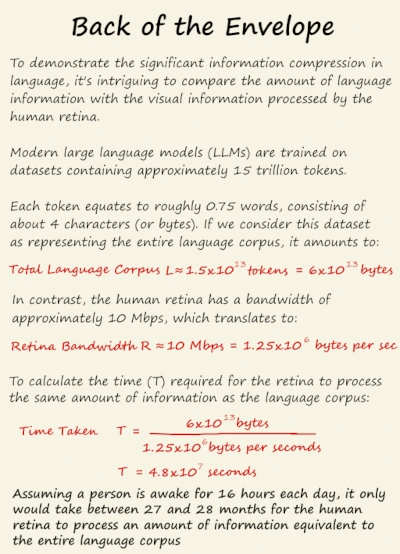

The brilliance of ChatGPT lies in its focus on LLMs as the foundation for generative AI capabilities. Language is universal, used by everyone, and has well-established translations, providing a highly compressed representation of our environment. The Internet serves as an ideal training dataset for LLMs, enabling high-fidelity solutions that leverage the information compression inherent in language, advancements in natural language processing (NLP), and large-scale ANN platforms.

Generative AI and LLM solutions have advanced significantly due to decades of innovation in natural language processing (NLP) and techniques for efficiently scaling ANNs. Key innovations include:

When first launched, OpenAI's ChatGPT solution comprised five key components: two focused on AI training and three on AI inference and rendering.

Training LLMs like those used in ChatGPT is an expensive endeavor, with costs reaching approximately USD 100 million for models like GPT-4. Despite the high training costs, these models hold significant value. As a result, numerous competitive LLMs have emerged as companies strive to harness this potential. Some prominent examples include Gemini by Google, Llama by Meta, Claude by Anthropic, DBRX by Databricks and Mosaic, and Phi-3 by Microsoft.

Today's LLMs and generative AI rely heavily on vast GPU clusters for training and on substantial GPU resources for subsequent inference and rendering. For instance, the training of GPT-4 reportedly required approximately 25,000 NVIDIA A100 GPUs over a period of 90 to 100 days. This intense demand has led to supply challenges for GPUs and has propelled NVIDIA, the pioneer of the GPU, to become one of the most valuable companies globally. The need for high-performance semiconductor technology is driving significant innovation not only by NVIDIA but also by other companies such as AMD, Apple, Cerebras, Intel, IBM, Qualcomm, and Samsung. Additionally, major players in the tech industry, including leading hyper-scalers like AWS, Google, and Microsoft (Azure), are developing their own in-house chipsets. These innovations, though diverse, generally aim to enhance performance capabilities, reduce power consumption, and adapt to evolving demands, such as the increased need for incremental training, model inference, and rendering.
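The scale of these figures is easier to appreciate as GPU-hours. The back-of-envelope sketch below uses only numbers quoted in this article (25,000 GPUs, 90 to 100 days, and a training cost of roughly USD 100 million). The implied cost per GPU-hour is a rough illustration under the assumption that the whole budget went on GPU time, which is unlikely to be strictly true.

```python
# Back-of-envelope arithmetic using the figures quoted in the text.
GPUS = 25_000
TRAINING_DAYS = (90, 100)           # quoted range
TRAINING_COST_USD = 100_000_000     # approximate figure quoted earlier

def gpu_hours(gpus, days):
    """Total GPU-hours if every GPU runs around the clock."""
    return gpus * days * 24

low, high = (gpu_hours(GPUS, days) for days in TRAINING_DAYS)
print(f"{low:,} to {high:,} GPU-hours")
# 54,000,000 to 60,000,000 GPU-hours

# Assumption: the entire cost is attributed to GPU time.
print(f"USD {TRAINING_COST_USD / high:.2f} to {TRAINING_COST_USD / low:.2f} per GPU-hour")
# USD 1.67 to 1.85 per GPU-hour
```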

Since AI solutions often demonstrate superhuman capabilities (such as excelling as a Chess World Champion, a Content Editor Extraordinaire, or a Pub Quiz Night Eliminator), there is a tendency to mistakenly generalize their abilities to other, unrelated tasks that humans perform. Experts in the AI industry clearly understand the distinction between the 'Narrow AI' we currently possess and 'General AI,' a future goal that will necessitate fundamentally different methodologies. The journey toward achieving General AI, and even the existence of such a pathway, is a topic of intense debate today and is likely to remain so for the foreseeable future.

AI systems often have significant blind spots, masked by their impressive abilities in specific areas. For example, developing an AI capable of autonomously driving a car is particularly challenging, especially given that the incorrect interpretation of so-called corner cases can have catastrophic outcomes. In contrast, applying AI to language tasks is relatively easier, as language acts as a universal, highly compressed source of information that is well-represented on the Internet, providing a comprehensive training set.

We are currently witnessing only a small fraction of the commercial opportunities for generative AI, both with LLMs and with other algorithms, such as advanced diffusion models for images, video, and audio. Nonetheless, industry players need to harness the strengths of these technologies while being mindful not to overextend their capabilities, and to carefully monitor the significant innovations that are inevitable in the coming years. Nearly every industry worldwide has either adopted or plans to adopt generative AI solutions. The global generative AI market is valued at USD 60 billion today and is projected to surpass USD 1 trillion in the next decade, with an anticipated compound annual growth rate (CAGR) of 30–40%. As this market grows, companies must focus on the capabilities and strategies needed to develop robust solutions. These include:

The stakes are high, with AI likely having a greater societal impact than the Internet. The current enthusiasm for AI shares many characteristics with the dot-com bubble of 2000. Lessons learned from that era provide valuable insights for companies as they craft their AI strategies to ensure they are amongst the next generation of winners who adopt the technology.
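As a sanity check on the market projection quoted earlier (USD 60 billion growing past USD 1 trillion within a decade at a CAGR of 30–40%), the sketch below computes the growth rate those two endpoints imply. It assumes "the next decade" means exactly ten years of compounding; the function names are illustrative.

```python
def implied_cagr(start, end, years):
    """Compound annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1

def project(start, rate, years):
    """Value after compounding `start` at `rate` for `years`."""
    return start * (1 + rate) ** years

START_USD_BN = 60       # current market size quoted in the text
TARGET_USD_BN = 1_000   # USD 1 trillion
YEARS = 10              # assumption: "the next decade" is ten years

rate = implied_cagr(START_USD_BN, TARGET_USD_BN, YEARS)
print(f"Implied CAGR: {rate:.1%}")  # Implied CAGR: 32.5%

for cagr in (0.30, 0.40):
    value = project(START_USD_BN, cagr, YEARS)
    print(f"At {cagr:.0%}: about USD {value:,.0f} billion after {YEARS} years")
```

Under these assumptions, the market needs to grow at roughly 32.5% a year to reach USD 1 trillion. The lower end of the quoted range (30%) lands at roughly USD 830 billion, while the upper end (40%) reaches about USD 1.7 trillion, so the projection sits within the quoted range but not at its bottom edge.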